Have you ever dreamed about scraping the shockmagazin.hu and load it’s album reviews to PostgreSQL?

This post is for you.

Steps - Short version

Step 1.

Step 2.

Step 3.

Steps - longer version

Step 1.

Let’s see our source shockmagazin.hu - CD reviews, and examine a section’s URL.

http://www.shockmagazin.hu/component/abc/?view=alphabetical&task=A§ionid=14&catid=77

As we can see the categories are appearing as URL parameter, so if we had a list from categories then we would be happy.

*The entirely pshell script is available on Github (❤️)

Step 1.1 - Define the categories

I chose powershell for webscraping, because I am familiar with it, but your decision can be differ from it for example phantomJS 1 or python, there are enourmous number of utility and method.

$urlCategory = @('0-9','A','B','C','D','E','F','G','H','I','J','K','L','M','N','O','P','Q','R','S','T','U','V','W','X','Y','Z')

$link = 'http://www.shockmagazin.hu/component/abc/?view=alphabetical&task='

We created an array $urlCategory that holds our category list and a variable $link with the base url.

Step 1.2 - loop through links then loop though links…

Next we are going to start loop the category list, that holds many links, so we loop the category page as well. Our pseudo code is ought to seem like this:

lisr[category]

storageForItems

loop in categories

loop in album links

store it's data in storageForItems

if it's an album review

write to disk

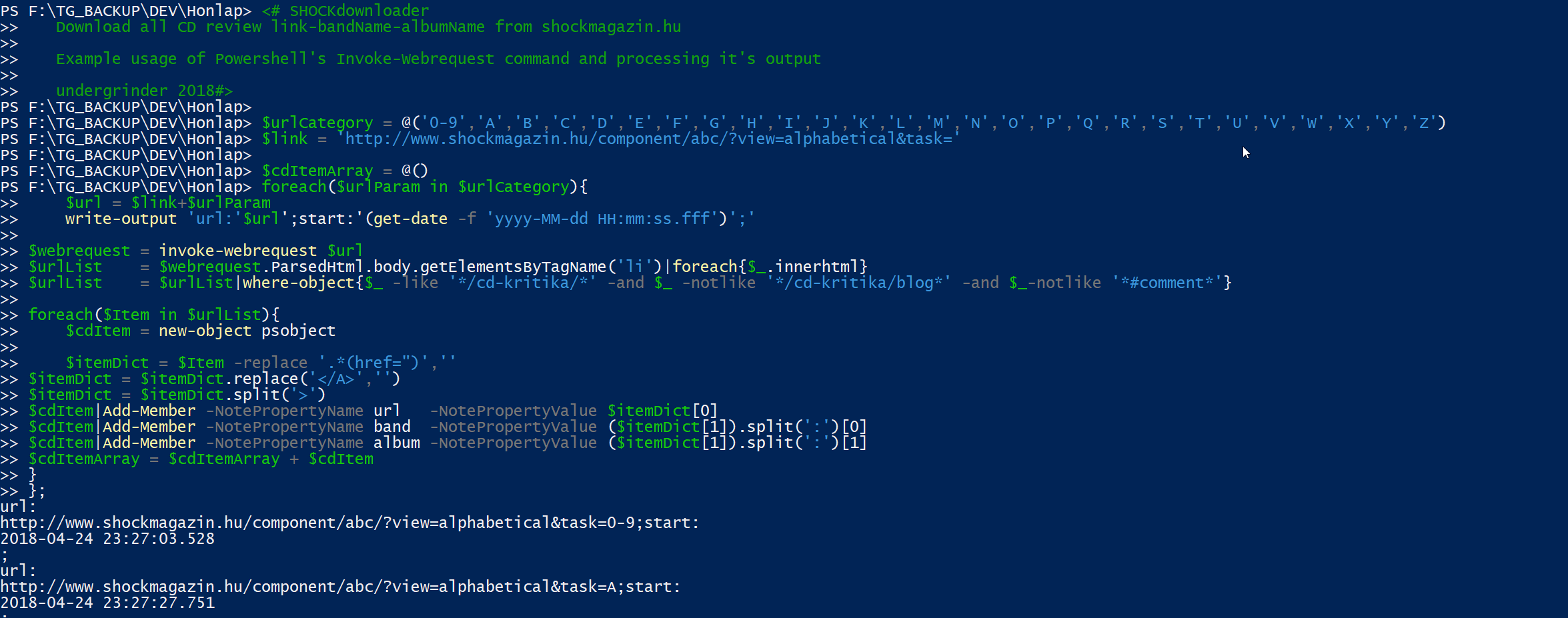

To implement our pseudo code we use pshell’s Invoke-Webrequest, this cmdlet returns a .net object with many properties and methods (COOL 😎).

-1. Create the storageForItems, that will hold a custom powershell object$cdItemArray = @(). It is empty so far.

-

Start a loop

foreach($urlParam in $urlCategory) - Create the url request

$url = $link+$urlParam - Get the data with Invoke-Webrequest cmdlet

$webrequest = invoke-webrequest $url - Get the url list. Our $webrequest object has a ParsedHTML property, it’s represent the html document even better we can query it’s content. Since the <li> tag holds the content we interested in, we query these tags with getElementById, afterward filter this list, so our new

$urlListarray holds only the album reviews link.

$urlList = $webrequest.ParsedHtml.body.getElementsByTagName('li')|foreach{$_.innerhtml}

$urlList = $urlList|where-object{$_ -like '*/cd-kritika/*' -and $_ -notlike '*/cd-kritika/blog*' -and $_-notlike '*#comment*'}

- Loop again - but this time in album links on the current category page.

Step 1.3 - comprehend the result

Example2

<a href="/cd-kritika/cannibal-corpse-torture">Cannibal Corpse: Torture</a>

The result is found above, notice it’s contains three kind of information

- Album URL - between html tags

- Band name - at the start of the tag contents

- Album title - in the tag content, after the semicolon

#main splitting

$itemDict = $Item -replace '.*(href=")',''

$itemDict = $itemDict.replace('</A>','')

$itemDict = $itemDict.split('>')

#add the first part to our object

$cdItem|Add-Member -NotePropertyName url -NotePropertyValue $itemDict[0]

#seconder split and assign to object

$cdItem|Add-Member -NotePropertyName band -NotePropertyValue ($itemDict[1]).split(':')[0]

$cdItem|Add-Member -NotePropertyName album -NotePropertyValue ($itemDict[1]).split(':')[1]

Please do not forget this $cdItem object add to our $cdItemArray array.

$cdItemArray = $cdItemArray + $cdItem

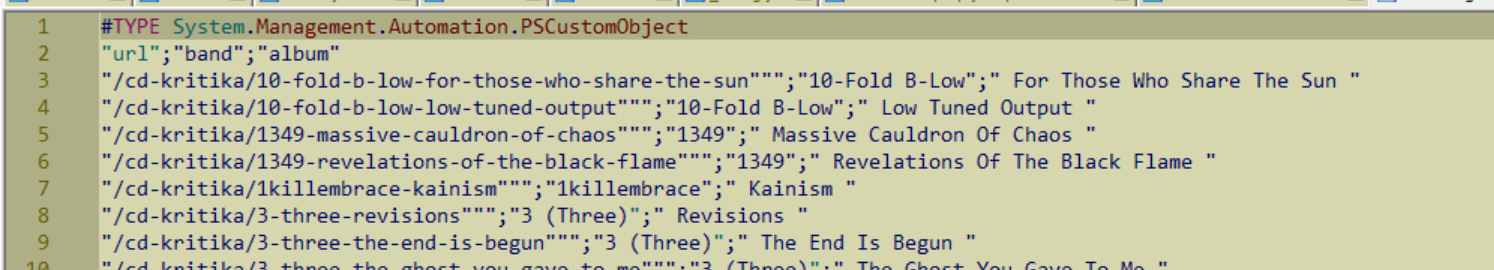

Step 1.4 - Write data to disk

$cdItemArray|export-csv shockmagazin.csv -delimiter ';'

A little tailoring is needed like remove the first line, and trim the quotes, it’s necessity depends on the capabilities of the next processing step’s tool (ignore first x lines, set the text separator etc.)

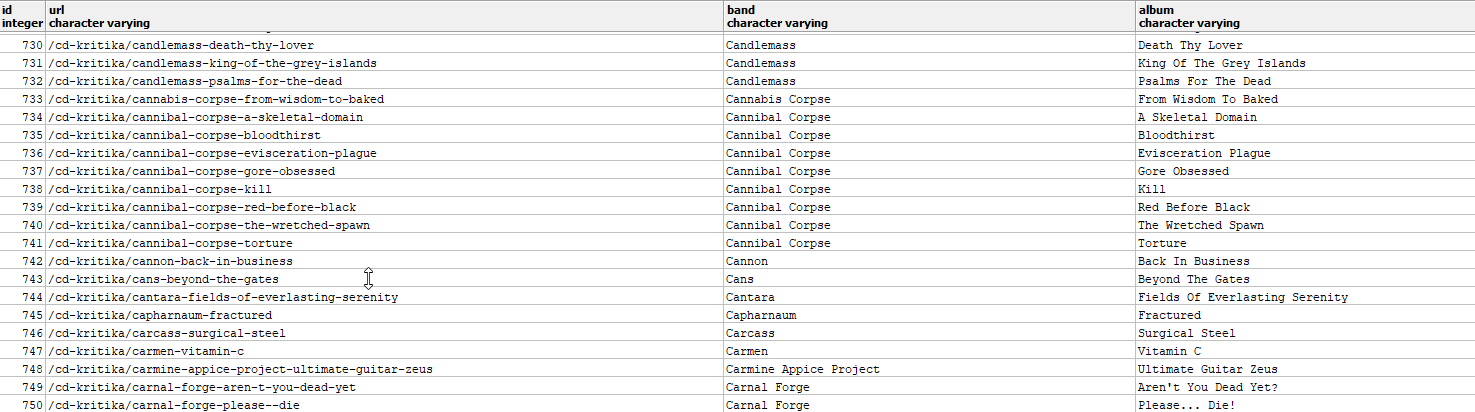

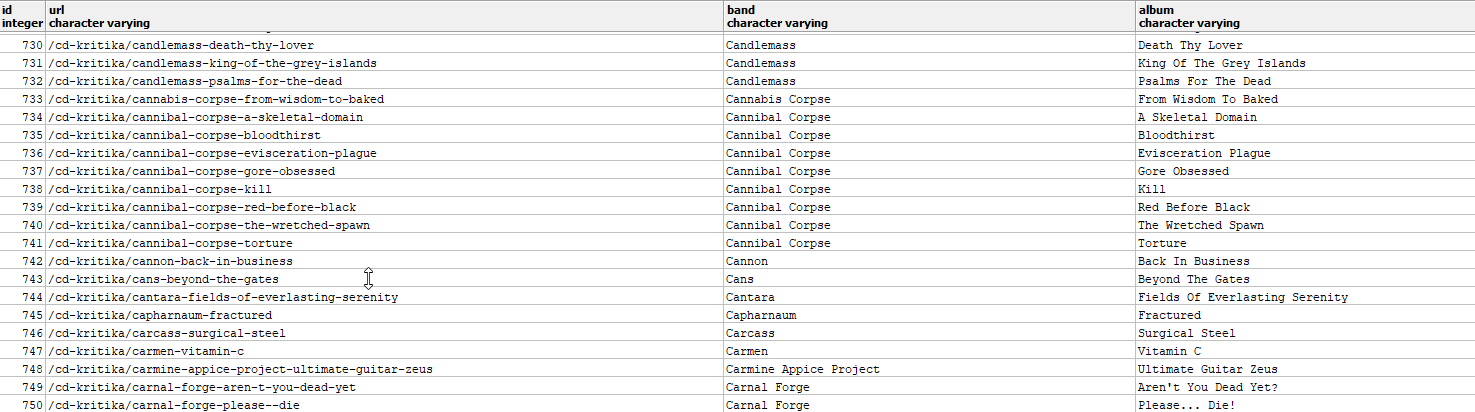

Sample from script execution

Step 2. - skippable

Step 3. - store our data in database

The previous section we store a csv file on hard disk, but like a cool guys we like our data in database. I chose PostgreSQL for this task, because it is open source without any license pitfalls (sure SQLite suits this particular post case, I know, and I ❤️ SQLite)

Step 3.1 - Create table

CREATE TABLE shockinfo

(id serial primary key,

url character varying,

band character varying,

album character varying);

Step 3.2 - Read the CSV

I Assume, that out csv file is well formatted, remoced the first line etc…etc…etc… so we can settle the data into the database, where data should belong to.

-

Open command line and type

psql -U [youruser], if the path variable for psql is set correctly, you can type it anywhere in your filesystem -

In psql execute the right command

\copy shockinfo(url,band,album) from shockmagazin.csv (DELIMITER ';');

Next steps -homework

We gathered and ingest some information from the web. If you are willing to dive deeper and develop the script feel free, it’s downloadable from Github (❤️). The next steps can be iterate on album links, and extract information about the albums, e.g. rating, or sniff other additional information from somewhere else, like metal-archives.com.

If you liked this post, or stucked/succeced/inspirred/inspirritationed leave a message below.

Have fun!🐷

Comments